Celeritas code will accelerate high energy physics simulations with supercomputers

Scientists at the Department of Energy’s Oak Ridge National Laboratory are leading a new project to ensure that the fastest supercomputers can keep up with big data from high energy physics research.

“For scientific big data, this is one of the largest challenges in the world,” said Marcel Demarteau, director of ORNL’s Physics Division and principal investigator of the project, which first aims to address a data tsunami that will arise from a major upgrade to the world’s most powerful particle accelerator, the Large Hadron Collider, or LHC. “Each of its largest particle detectors will be capable of streaming 50 terabits per second—the data equivalent to watching 10 million high-definition Netflix movies concurrently.”
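A rough check of the comparison in the quote: 50 terabits per second shared among 10 million simultaneous streams works out to about 5 megabits per second per stream. The short Python sketch below does only that division. Both inputs come from the quote, and the per-stream rate is derived from them. It is not a Netflix specification.

```python
# Figures taken from the quote above.
DETECTOR_RATE_BITS_PER_S = 50e12  # 50 terabits per second
CONCURRENT_STREAMS = 10_000_000   # 10 million HD movies


def per_stream_rate_mbps(total_bits_per_s: float, streams: int) -> float:
    """Return the bandwidth each stream would receive, in megabits per second."""
    return total_bits_per_s / streams / 1e6


rate = per_stream_rate_mbps(DETECTOR_RATE_BITS_PER_S, CONCURRENT_STREAMS)
print(f"{rate:.1f} Mb/s per stream")  # prints "5.0 Mb/s per stream"
```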

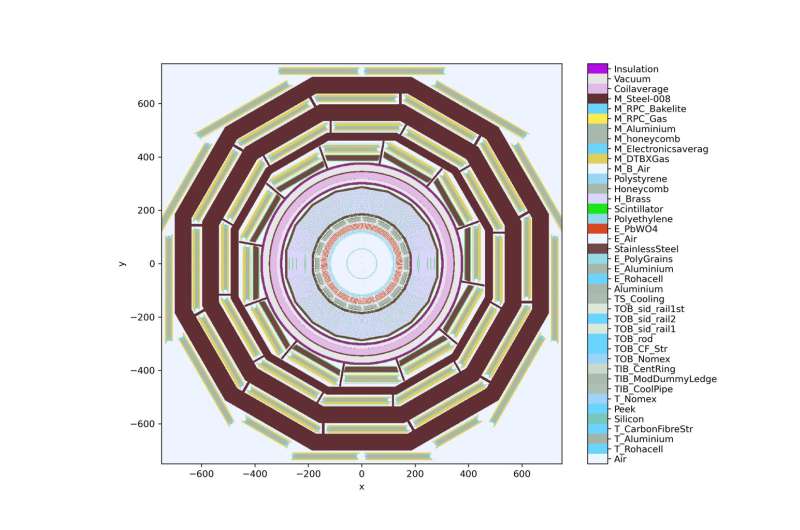

The LHC sits deep underground at the European Organization for Nuclear Research, or CERN, located on the border between Switzerland and France. Smashing protons and heavier nuclei, the LHC produces progeny particles that its detectors track. The detectors generate enormous amounts of data, which researchers compare against simulations to validate theories. The knowledge gained improves our understanding of fundamental forces.

Researchers expect the upgraded particle accelerator, named the High-Luminosity LHC, to begin operations in 2029. Luminosity measures how tightly packed particles are as they zip through the accelerator and collide. Higher luminosity means more particle collisions. The upgraded LHC promises discoveries but at a cost: it will create more data than current simulation codes can keep pace with.

“The High-Luminosity LHC will boost the number of proton collisions to 10 times what the LHC can produce,” Demarteau said.

To address this challenge, partners in the new project are developing a simulation code called Celeritas, the Latin word for speed. Current simulation codes work by calculating the particles’ electromagnetic interactions as they move through the detectors. To vastly increase the data throughput from high-fidelity simulations of high energy physics experiments, Celeritas will use new algorithms that run on graphics processing units for massively parallel processing on leadership-class computing platforms such as ORNL’s Frontier. Frontier, the world’s first exascale computer, can perform a quintillion calculations per second. In other words, in one second it can complete a task that would take the entire global population more than four years if each person completed one calculation every second.
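The “more than four years” comparison comes from simple arithmetic. Divide a quintillion (10^18) calculations by the number of people, each doing one per second, then convert the result from seconds to years. The sketch below assumes a world population of about 7.8 billion and a 365.25-day year. The article does not say which population figure it used, so that number is our assumption. The result sits close to four years, which makes it sensitive to the population chosen. With 8 billion people, it falls just under four.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, about 3.16e7 s


def human_years_for_one_exascale_second(population: float,
                                        ops_per_second: float = 1e18) -> float:
    """Years the given population would need, at one calculation per person
    per second, to match one second of work by an exascale machine."""
    seconds_needed = ops_per_second / population
    return seconds_needed / SECONDS_PER_YEAR


# Assumed world population (not stated in the article).
years = human_years_for_one_exascale_second(7.8e9)
print(f"about {years:.2f} years")  # prints "about 4.06 years"
```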

Celeritas is one of five projects that DOE’s Office of Science is funding to accelerate high energy physics discoveries through high-performance computing. Its Advanced Scientific Computing Research and High Energy Physics offices support the project through a program called Scientific Discovery through Advanced Computing, or SciDAC.

“Celeritas is an important step in reworking the entire way computational simulations and analyses are done in the high energy physics ecosystem,” said ORNL’s Tom Evans. He will lead the multilaboratory project, which includes scientists at Argonne National Laboratory and Fermi National Accelerator Laboratory, or Fermilab.

Evans also leads ORNL’s High-Performance Computing Methods for Nuclear Applications Group and spearheads applications development related to the nation’s energy portfolio for DOE’s Exascale Computing Project. He uses Monte Carlo techniques that rely on repeated random sampling to step each particle through a virtual world and simulate the history of its movement.

“Think of it as a dice-rolling game, where we simulate particle tracks based on the best available physical models of their interactions with matter,” said Evans, who works with ORNL’s Seth Johnson developing methods and tools to optimize Celeritas. “We simulate tracks crossing a detector and compare them with data that comes out of the detector. These correlations are used to validate theories of the Standard Model of particle physics.”
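A toy example makes Evans’s dice-rolling analogy concrete. The Python sketch below is a textbook one-dimensional Monte Carlo transport problem, not Celeritas code. Particles enter a slab of material. One random number sets the distance to each interaction, drawn from an exponential distribution with an assumed mean free path. A second random number decides whether the particle is absorbed or scattered in a new random direction. The slab thickness, mean free path and absorption probability are hypothetical parameters chosen for illustration. Real detector simulations use detailed geometry and physics models.

```python
import math
import random


def transmitted_fraction(thickness: float, mean_free_path: float,
                         absorb_prob: float, n_particles: int,
                         seed: int = 1) -> float:
    """Toy Monte Carlo: fraction of particles that exit the far face of a
    slab spanning x = 0 to x = thickness, entering at x = 0 moving in +x."""
    rng = random.Random(seed)
    transmitted = 0
    for _ in range(n_particles):
        x, mu = 0.0, 1.0  # position and direction cosine along x
        while True:
            # "Roll the dice" for the distance to the next interaction:
            # exponentially distributed with the given mean free path.
            step = -mean_free_path * math.log(1.0 - rng.random())
            x += mu * step
            if x >= thickness:
                transmitted += 1  # left through the far face
                break
            if x < 0.0:
                break  # escaped back out through the front face
            # Roll again to choose the interaction type.
            if rng.random() < absorb_prob:
                break  # absorbed in the material
            # Scatter: a uniform direction cosine is isotropic in 3D.
            mu = rng.uniform(-1.0, 1.0)
    return transmitted / n_particles


if __name__ == "__main__":
    est = transmitted_fraction(2.0, 1.0, absorb_prob=1.0, n_particles=100_000)
    print(f"Monte Carlo: {est:.4f}  analytic: {math.exp(-2.0):.4f}")
```

For a pure absorber (`absorb_prob=1.0`), the estimate should approach the analytic value `exp(-thickness / mean_free_path)`. That gives a simple check that the random sampling is correct before scattering is switched on.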

Currently, the Worldwide LHC Computing Grid manages the storage, distribution and analysis of LHC data. Its 13 largest sites, including two in the United States, at DOE’s Brookhaven National Laboratory and Fermilab, connect via high-speed networks. This open science grid’s distributed computing infrastructure provides more than 12,000 physicists worldwide with near real-time access to data and the power to process it.

“We want to incorporate DOE’s leadership-class computing facilities into this network to bring to bear their rich computing power and resources,” Evans said. Currently, neither the Argonne Leadership Computing Facility at Argonne National Laboratory nor the Oak Ridge Leadership Computing Facility at ORNL is part of this network.

At RAPIDS2, the SciDAC Institute for Computer Science, Data and Artificial Intelligence, Celeritas collaborators also will develop workflow tools to make DOE’s federated computing facilities compatible with high energy physics computing centers. ORNL’s Fred Suter and Stefano Tognini work with Scott Klasky, leader of the lab’s Workflow Systems Group, to integrate advanced tools and technologies that reduce the burden of high data traffic in and out of processors.

“As high-performance computing resources continue to grow in computational capability, we have seen much less growth in their storage bandwidth and capacity. That means that we must continue to optimize workflows to keep up with this imbalance,” Klasky said. “This is absolutely necessary for scientific discovery, especially as we move to the Worldwide LHC Computing Grid. The conventional techniques that they have used there just will not be sufficient anymore for this compute challenge.”

The team’s first goal is to simulate the LHC’s Compact Muon Solenoid detector on ORNL supercomputers. Researchers on this experiment are eager to integrate Celeritas into their existing software framework. “They’ve given us our first major target—modeling a subcomponent of the Compact Muon Solenoid detector called the high-granularity calorimeter,” Evans said. Demarteau added, “It is a complex state-of-the-art detector for measuring energies. It is a highly sophisticated detector and an ideal test case for Celeritas.”

Celeritas has important consequences for analyzing data from detector experiments at CERN, but its success will also serve experiments elsewhere. “Neutrino experiments like the Deep Underground Neutrino Experiment again employ a massive detector to detect neutrino interactions,” said Demarteau, calling out an experiment in the Midwest. “This tool will also enable new insights about interactions of neutrinos with matter.”

Citation:

Celeritas code will accelerate high energy physics simulations with supercomputers (2022, December 14) retrieved 14 December 2022 from https://techxplore.com/news/2022-12-celeritas-code-high-energy-physics.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
