What is the one thing most photographers wish for? Perhaps a less noisy low-light picture, or even a camera that can see in the dark. It may be some time before hardware can deliver this, but MultiNeRF, an open-source project from Google Research, could change low-light photography sooner than we expect.

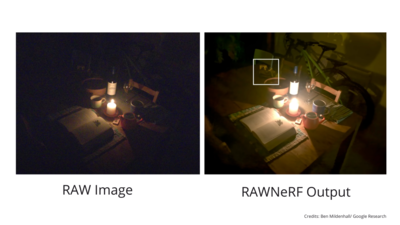

Google Research’s RawNeRF, a part of the MultiNeRF project, promises results well ahead of other noise-reduction tools. RawNeRF reads images and, using artificial intelligence, recovers more detail in photos taken in dark and low-light conditions.

“When optimised over many noisy raw inputs, NeRF produces a scene representation so accurate that its rendered novel views outperform dedicated single and multi-image deep raw denoisers run on the same wide baseline input images,” reads the research paper, hosted on Cornell University’s arXiv.

What is NeRF?

NeRF (neural radiance field) is a neural network technique capable of reconstructing accurate 3D renders of a scene from a set of 2D images. According to Ben Mildenhall, one of the researchers at Google, NeRF is built to work best with well-lit scenes.

How does MultiNeRF improve on NeRF’s shortcomings?

When NeRF is applied to images taken in low-light conditions, the results are noisier and lose detail. Denoising tools can reduce the noise, but at the cost of losing even more detail.

RawNeRF, by contrast, runs its algorithms on RAW images, and the AI is tasked with reducing the noise captured by the sensor while preserving detail, in effect letting us see in the dark.
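Why many noisy RAW captures can produce a cleaner result than any single one can be illustrated with a toy calculation. The sketch below is not RawNeRF's method, which optimises a neural scene representation; it swaps in plain frame averaging for a single pixel, and assumes the sensor noise is zero-mean and Gaussian. The pixel value, noise level, and function names are all hypothetical, chosen only for illustration.

```python
import random
import statistics

# Hypothetical values for one dim pixel in linear raw space (assumptions).
TRUE_VALUE = 0.05   # the "real" brightness of the pixel
NOISE_STD = 0.02    # standard deviation of the assumed zero-mean sensor noise


def simulate_raw_frames(true_value, noise_std, num_frames, seed):
    """Return noisy readings of the same pixel across several captures."""
    rng = random.Random(seed)
    return [true_value + rng.gauss(0.0, noise_std) for _ in range(num_frames)]


def rms_error(estimates, true_value):
    """Root-mean-square error of a list of estimates against the true value."""
    squared = [(e - true_value) ** 2 for e in estimates]
    return (sum(squared) / len(squared)) ** 0.5


# Repeat the experiment 200 times to measure the typical error.
single_frame = [
    simulate_raw_frames(TRUE_VALUE, NOISE_STD, 1, seed=s)[0]
    for s in range(200)
]
averaged_100 = [
    statistics.fmean(simulate_raw_frames(TRUE_VALUE, NOISE_STD, 100, seed=s))
    for s in range(200)
]

print("single frame RMS error:   ", rms_error(single_frame, TRUE_VALUE))
print("100-frame mean RMS error: ", rms_error(averaged_100, TRUE_VALUE))
```

Under these assumptions the error of the averaged estimate shrinks roughly with the square root of the number of frames, which is the basic reason combining many noisy inputs can recover detail that a single dark exposure hides.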

Google says RawNeRF is more capable of reducing noise than dedicated denoising tools. It can also change the camera position to view the scene from different angles, and change the exposure, tone mapping, and focus “with accurate bokeh effects”.
