Key to resilient energy-efficient AI may reside in human brain

A clearer understanding of how astrocytes, a type of brain cell, function and how they can be emulated in the physics of hardware devices may result in artificial intelligence (AI) and machine learning that autonomously self-repairs and consumes much less energy than current technologies do, according to a team of Penn State researchers.

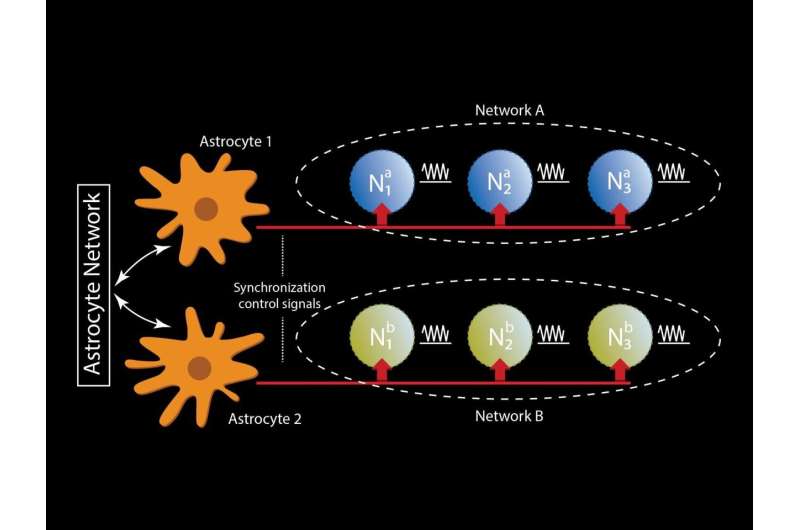

Astrocytes are named for their star shape and are a type of glial cell, the support cells for neurons in the brain. They play a crucial role in brain functions such as memory, learning, self-repair and synchronization.

“This project stemmed from recent observations in computational neuroscience, as there has been a lot of effort and understanding of how the brain works and people are trying to revise the model of simplistic neuron-synapse connections,” said Abhronil Sengupta, assistant professor of electrical engineering and computer science. “It turns out there is a third component in the brain, the astrocytes, which constitutes a significant section of the cells in the brain, but its role in machine learning and neuroscience has kind of been overlooked.”

At the same time, the AI and machine learning fields are experiencing a boom. According to the analytics firm Burning Glass Technologies, demand for AI and machine learning skills is expected to increase at a compound growth rate of 71% by 2025. However, as the use of these technologies increases, AI and machine learning face a challenge: they use a lot of energy.

“An often-underestimated issue of AI and machine learning is the amount of power consumption of these systems,” Sengupta said. “A few years back, for instance, IBM tried to simulate the brain activity of a cat, and in doing so ended up consuming around a few megawatts of power. And if we were to just extend this number to simulate brain activity of a human being on the best possible supercomputer we have today, the power consumption would be even higher than megawatts.”

All this power usage stems from the complex dance of switches, semiconductors and other mechanical and electrical processes involved in computer processing, and it grows sharply when the processes are as complex as those AI and machine learning demand. A potential solution is neuromorphic computing, which mimics brain functions. Neuromorphic computing interests researchers because the human brain has evolved to use much less energy for its processes than a computer does, so mimicking those functions could make AI and machine learning more energy efficient.
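Spiking neural networks, a common model in neuromorphic computing, help illustrate the energy argument: a spiking neuron only communicates when its input pushes it past a threshold, so a quiet neuron generates no events. The sketch below is a textbook leaky integrate-and-fire (LIF) neuron, assumed here purely for illustration; it is not the Penn State team's model, and the function name `simulate_lif` and all parameter values (`tau`, `v_thresh` and so on) are hypothetical.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Return the time-step indices at which a leaky integrate-and-fire
    neuron spikes. All parameter values are illustrative assumptions."""
    v = v_rest
    spike_times = []
    for t, i_in in enumerate(input_current):
        # Euler step: the membrane potential leaks toward rest and integrates input.
        v += (dt / tau) * (v_rest - v) + dt * i_in
        if v >= v_thresh:
            # Emit a spike (a discrete event) and reset the membrane potential.
            spike_times.append(t)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    print(simulate_lif([0.1] * 50))  # constant drive: [13, 27, 41]
    print(simulate_lif([0.0] * 50))  # no drive: [] (no events at all)
```

With a constant input of 0.1 the neuron fires every 14 steps; with zero input it never fires, the kind of sparse, event-driven activity that neuromorphic designs try to exploit.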

Another brain function that holds potential for neuromorphic computing is how the brain can self-repair damaged neurons and synapses.

“Astrocytes play a very crucial role in self-repairing the brain,” Sengupta said. “When we try to come up with these new device structures, we try to form a prototype artificial neuromorphic hardware, these are characterized by a lot of hardware-level faults. So perhaps we can draw insights from computational neuroscience based on how astrocyte glial cells are causing self-repair in the brain and use those concepts to possibly cause self-repair of neuromorphic hardware to repair these faults.”
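The self-repair idea can be illustrated with a deliberately simplified toy model. The sketch assumes, hypothetically, that an astrocyte-like unit monitors the total synaptic drive reaching one neuron and, when some synapses fail (for example, faulty hardware synapses stuck at zero), broadcasts a single gain that strengthens the surviving synapses until the drive is restored, up to a cap. The function `astrocyte_repair` and its `gain_cap` parameter are illustrative assumptions; this is not the mechanism or code from the Penn State studies.

```python
def astrocyte_repair(weights, faulty, gain_cap=3.0):
    """Toy astrocyte-inspired repair (hypothetical, for illustration only).

    weights: pre-fault synaptic weights of one neuron.
    faulty:  set of indices of synapses that have failed (output zero).
    Returns repaired weights whose total approaches the pre-fault total.
    """
    target_drive = sum(weights)
    healthy_drive = sum(w for i, w in enumerate(weights) if i not in faulty)
    if healthy_drive == 0:
        # Nothing left to strengthen, so the fault cannot be compensated.
        return [0.0] * len(weights)
    # One shared "astrocyte" gain, capped so healthy synapses
    # cannot be strengthened without limit.
    gain = min(target_drive / healthy_drive, gain_cap)
    return [0.0 if i in faulty else w * gain for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.5, 0.5, 0.5, 0.5]
    print(astrocyte_repair(w, faulty={0, 1}))     # [0.0, 0.0, 1.0, 1.0]
    print(astrocyte_repair(w, faulty={0, 1, 2}))  # [0.0, 0.0, 0.0, 1.5]
```

When half the synapses fail, a gain of 2.0 fully restores the neuron's total input of 2.0; when three of four fail, the cap limits recovery to 1.5. The cap is a design choice standing in for limits on how far healthy synapses can realistically be strengthened.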

Sengupta’s lab primarily works with spintronic devices, a form of electronics that processes information using electron spin. The researchers study the devices’ magnetic structures and how to make them neuromorphic by mimicking various neural and synaptic functions of the brain in the intrinsic physics of the devices.

The lab’s self-repair work was part of a study published in January in Frontiers in Neuroscience. That research, in turn, led to a second study recently published in the same journal.

“When we started working on the aspects of self-repair in the previous study, we realized that astrocytes also contribute to temporal information binding,” Sengupta said.

Temporal information binding is how the brain makes sense of relations between separate events that happen at different times and interprets those events as a sequence, an important function for AI and machine learning. “It turns out that the magnetic structures we were working with in the prior study can be synchronized together through various coupling mechanisms, and we wanted to explore how you can have these synchronized magnetic devices mimic astrocyte-induced phase coupling, going beyond prior work on solely neuro-synaptic devices,” Sengupta said. “We want the intrinsic physics of the devices to mimic the astrocyte phase coupling that you have in the brain.”
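Phase coupling between oscillators is commonly studied with the Kuramoto model, in which each oscillator's phase is pulled toward the phases of the others: dθ_i/dt = ω_i + (K/N) Σ_j sin(θ_j − θ_i). The sketch below uses that generic model as a stand-in for coupled oscillator neurons; it is an assumption for illustration and does not model spin-orbit torque device physics or the astrocyte mechanism from the study. The coupling strength `coupling`, the natural frequencies and the initial phases are hypothetical values.

```python
import math


def kuramoto_step(phases, freqs, coupling, dt):
    """One Euler step of d(theta_i)/dt = w_i + (K/N) * sum_j sin(theta_j - theta_i)."""
    n = len(phases)
    new_phases = []
    for theta_i, w_i in zip(phases, freqs):
        # Average pull of all oscillators (including i itself, which adds sin(0) = 0).
        pull = sum(math.sin(theta_j - theta_i) for theta_j in phases) / n
        new_phases.append(theta_i + dt * (w_i + coupling * pull))
    return new_phases


def order_parameter(phases):
    """Kuramoto order parameter r in [0, 1]; r near 1 means the phases are synchronized."""
    n = len(phases)
    re = sum(math.cos(p) for p in phases) / n
    im = sum(math.sin(p) for p in phases) / n
    return math.hypot(re, im)


def simulate(coupling, steps=2000, dt=0.01):
    phases = [0.0, 2.0, 4.0]  # hypothetical, roughly evenly spread start
    freqs = [1.0, 1.1, 0.9]   # hypothetical, slightly mismatched natural frequencies
    for _ in range(steps):
        phases = kuramoto_step(phases, freqs, coupling, dt)
    return order_parameter(phases)


if __name__ == "__main__":
    print(f"uncoupled r = {simulate(coupling=0.0):.2f}")
    print(f"coupled   r = {simulate(coupling=2.0):.2f}")
```

Without coupling, each oscillator keeps drifting at its own frequency, so r only reflects wherever the phases happen to be (here it ends near 0.06). With a coupling of 2.0, the three oscillators lock together despite their frequency mismatch and r ends close to 1.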

To better understand how this might be achieved, the researchers developed neuroscience models, including models of astrocytes, to determine which aspects of astrocyte function would be most relevant to their research. They also developed theoretical models of the potential spintronic devices.

“We needed to understand the device physics and that involved a lot of theoretical modeling of the devices, and then we looked into how we could develop an end-to-end, cross-disciplinary modeling framework including everything from neuroscience models to algorithms to device physics,” Sengupta said.

Creating such energy-efficient and fault-resilient “astromorphic computing” could allow more sophisticated AI and machine learning work to run on power-constrained devices such as smartphones.

“AI and machine learning is revolutionizing the world around us every day, you see it from your smartphones recognizing pictures of your friends and family, to machine learning’s huge impact on medical diagnosis for different kinds of diseases,” Sengupta said. “At the same time, studying astrocytes for the type of self-repair and synchronization functionalities they can enable in neuromorphic computing is really in its infancy. There’s a lot of potential opportunities with these kinds of components.”

Along with Sengupta, the authors of the first paper, published in January, “On the Self-Repair Role of Astrocytes in STDP Enabled Unsupervised SNNs,” include Mehul Rastogi, former research intern in the Neuromorphic Computing Lab; Sen Lu, graduate research assistant in computer science; and Nafiul Islam, graduate research assistant in electrical engineering. Along with Sengupta, the authors of the paper published in October, “Emulation of Astrocyte Induced Neural Phase Synchrony in Spin-Orbit Torque Oscillator Neurons,” include Umang Garg, who was a research intern at Penn State during the study, and Kezhou Yang, doctoral candidate in materials science.

Umang Garg et al, Emulation of Astrocyte Induced Neural Phase Synchrony in Spin-Orbit Torque Oscillator Neurons, Frontiers in Neuroscience (2021). DOI: 10.3389/fnins.2021.699632

Mehul Rastogi et al, On the Self-Repair Role of Astrocytes in STDP Enabled Unsupervised SNNs, Frontiers in Neuroscience (2021). DOI: 10.3389/fnins.2020.603796

Citation: Key to resilient energy-efficient AI may reside in human brain (2021, November 1) retrieved 1 November 2021 from https://techxplore.com/news/2021-11-key-resilient-energy-efficient-ai-reside.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
