Researchers give robots better tools to manage conflicts in dialogues

A new thesis from Umeå University shows how robots can manage conflicts and knowledge gaps in dialogues with people. By understanding why dialogues sometimes don’t unfold as expected, researchers have developed strategies and mechanisms that could be important when humans and robots live side by side.

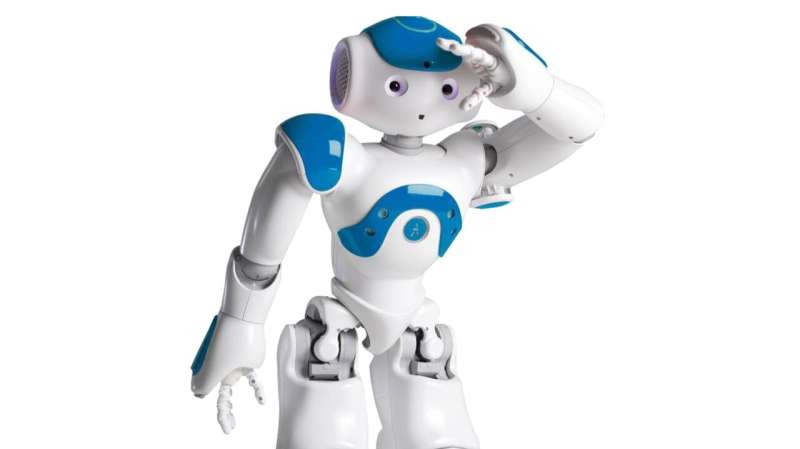

In the not-too-distant future, we may wake up to a world where a helpful robot, “Robbie,” has been seamlessly integrated into our everyday lives. The ability to hold meaningful dialogues sets Robbie apart from other machines. But when Robbie and the human focus on different things or lack common knowledge, continuing the dialogue can become difficult.

Maitreyee Tewari, doctoral student at the Department of Computing Science, found that problems and conflicts in dialogues relate to four themes: expectations, understanding, relating and interacting.

“Take, for example, a dialogue where Robbie asks: ‘Why are you not feeling well?’ The person says, ‘I don’t want to discuss it. I’ll probably feel better after some breakfast.’ Humans can manage such situations effortlessly. However, for robots like Robbie, it’s still an open research problem,” she says.

Tewari has identified different strategies that Robbie could apply to manage conflicts when collaborating with people to support them in achieving their goals. This may involve adapting to the person or persuading them. For example, it could be important for an older adult living alone to take their medicines on time and to contact a health care professional if they are not feeling well. In these situations, to act “intelligently,” Robbie should have knowledge about the situation, be able to reason about the consequences of different choices, and evaluate both the effect of its suggestions and how the person reacts to them.

These findings can benefit designers who intend to build dialogue capabilities into Robbie-like robots that share people’s social environments, such as homes or care facilities. They can help robots like Robbie overcome conflicts, develop new knowledge in collaboration with people, and manage future dialogues much as a human would, in a way that feels natural to people.

Tewari’s studies showed that people related to the robot as if it were another person. For example, study participants compared robots with children and expected people to have patience with them.

“It was surprising to see that older people were more open-minded, empathic and accepting towards robots compared to younger people,” says Tewari.

One study also showed that when the robot or the person partially adapts to what the other wants to talk about during a conflict, this seems to be a good enough solution from the human perspective, a finding that may be interesting to study further.

“Dialogues that may seem simple to humans are rich and complex from an AI perspective, requiring understanding at multiple levels, which today’s agents like Robbie cannot build. Therefore, more research is needed in this direction,” says Tewari.

More information:

Breakdown situations in dialogues between humans and socially intelligent agents. umu.diva-portal.org/smash/record.jsf?pid=diva2%3A1812696&dswid=7358

Citation:

Researchers give robots better tools to manage conflicts in dialogues (2023, December 11), retrieved 12 December 2023 from https://techxplore.com/news/2023-12-robots-tools-conflicts-dialogues.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.
