Robot stand-in mimics your movements in VR

Researchers from Cornell and Brown University have developed a souped-up telepresence robot that responds automatically and in real time to a remote user’s movements and gestures in virtual reality.

The robotic system, called VRoxy, allows a remote user in a small space, like an office, to collaborate via VR with teammates in a much larger space. VRoxy represents the latest in remote, robotic embodiment from researchers in the Cornell Ann S. Bowers College of Computing and Information Science.

“The great benefit of virtual reality is we can leverage all kinds of locomotion techniques that people use in virtual reality games, like instantly moving from one position to another,” said Mose Sakashita, a doctoral student in the field of information science. “This functionality enables remote users to physically occupy a very limited amount of space but collaborate with teammates in a much larger remote environment.”

Sakashita is the lead author of “VRoxy: Wide-Area Collaboration From an Office Using a VR-Driven Robotic Proxy,” to be presented at the ACM Symposium on User Interface Software and Technology (UIST), held Oct. 29 through Nov. 1 in San Francisco.

VRoxy’s automatic, real-time responsiveness is key for both remote and local teammates, researchers said. With a robot proxy like VRoxy, a remote teammate confined to a small office can take part in a group activity held in a much larger space, such as a design collaboration session.

For local teammates, the VRoxy robot automatically mimics the remote user’s body position and other vital nonverbal cues that are otherwise lost with conventional telepresence robots and on Zoom. For instance, VRoxy’s monitor, which displays a rendering of the user’s face, tilts to follow the user’s focus.

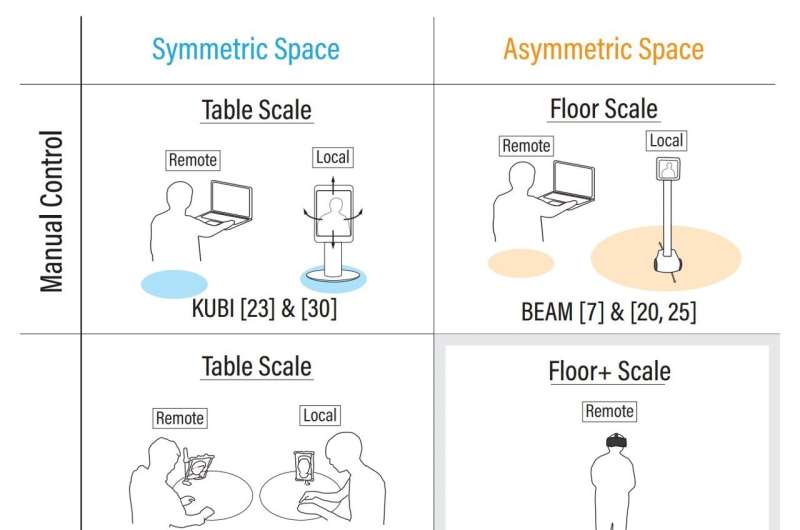

VRoxy builds on a similar Cornell robot called ReMotion, which worked only if both the local and remote users had the same hardware and identically sized workspaces. VRoxy removes that constraint: the system maps small movements made by the remote user in VR to larger movements in the physical space, researchers said.
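The article does not say how VRoxy performs this mapping. As a rough illustration only, the sketch below assumes the simplest possible scheme: both spaces are modeled as rectangles, and a position in the remote office is scaled linearly, axis by axis, to the corresponding point in the larger room. The `Rect` type and `map_to_room` function are hypothetical names, not part of VRoxy, and the real system also relies on its teleport-style navigation mode rather than any fixed scale factor alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Hypothetical floor area in meters, with the origin at one corner."""
    width: float
    depth: float


def map_to_room(x: float, y: float, office: Rect, room: Rect) -> tuple[float, float]:
    """Scale a position in the small remote office to the larger local room.

    Assumption for illustration: an independent linear scale factor per axis.
    This is not VRoxy's published mapping.
    """
    if not (0.0 <= x <= office.width and 0.0 <= y <= office.depth):
        raise ValueError("position lies outside the office bounds")
    sx = room.width / office.width   # horizontal scale factor
    sy = room.depth / office.depth   # depth scale factor
    return (x * sx, y * sy)


office = Rect(width=2.0, depth=2.0)   # small office the remote user stands in
room = Rect(width=10.0, depth=6.0)    # larger shared space the robot moves in
print(map_to_room(1.0, 0.5, office, room))  # (5.0, 1.5)
```

Under this assumption, a one-meter step in the office becomes a five-meter move across the room's width, which shows why a small physical footprint can cover a much larger space.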

VRoxy is equipped with a 360-degree camera, a monitor that displays facial expressions captured by the user’s VR headset, a robotic pointer finger and omnidirectional wheels.

Wearing a VR headset, a VRoxy user can switch between two view modes. Live mode shows an immersive, real-time view of the collaborative space for interacting with local collaborators, while navigation mode displays rendered pathways through the room, allowing remote users to “teleport” to where they’d like to go. Navigation mode allows quicker, smoother movement for the remote user and limits motion sickness, researchers said.

The system’s automatic nature lets remote teammates focus solely on collaboration rather than on manually steering the robot, researchers said.

In future work, Sakashita wants to supercharge VRoxy with robotic arms that would allow remote users to interact with physical objects in the live space via the robot proxy. He also envisions VRoxy mapping a space on its own, much like a Roomba vacuum cleaner; currently, the system relies on ceiling markers to help the robot navigate a room. Adding real-time mapping could allow VRoxy to be deployed in a classroom, he said.

More information:

Paper: infosci.cornell.edu/~mose/pape … s/UIST2023_VRoxy.pdf

Citation:

Robot stand-in mimics your movements in VR (2023, October 26) retrieved 27 October 2023 from https://techxplore.com/news/2023-10-robot-stand-in-mimics-movements-vr.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
